We had an outage in our Tenant Service API, which in turn caused a broad outage of many of our APIs and the Cloudflare Dashboard.

The incident’s impact stemmed from several issues, but the immediate trigger was a bug in the dashboard. This bug caused repeated, unnecessary calls to the Tenant Service API. The API calls were managed by a React useEffect hook, but we mistakenly included a problematic object in its dependency array. Because this object was recreated on every state or prop change, React treated it as “always new,” causing the useEffect to re-run each time. As a result, the API call executed many times during a single dashboard render instead of just once. This behavior coincided with a service update to the Tenant Service API, compounding instability and ultimately overwhelming the service, which then failed to recover.
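To make the failure mode concrete, here is a minimal sketch that does not use React itself but mimics how React decides whether to re-run an effect: each dependency is compared with its value from the previous render using `Object.is`. The names `depsChanged`, `simulateRenders`, and `orgId` are hypothetical and are not taken from the dashboard code.

```typescript
// Mimics React's dependency check: an effect re-runs when any dependency
// differs from the previous render's value under Object.is.
function depsChanged(
  prev: readonly unknown[] | null,
  next: readonly unknown[],
): boolean {
  if (prev === null) return true; // the first render always runs the effect
  if (prev.length !== next.length) return true;
  return next.some((dep, i) => !Object.is(dep, prev[i]));
}

// Counts how many times an effect would run across `renders` renders,
// given a function that builds the dependency array on each render.
function simulateRenders(
  renders: number,
  makeDeps: () => readonly unknown[],
): number {
  let prev: readonly unknown[] | null = null;
  let effectRuns = 0;
  for (let i = 0; i < renders; i++) {
    const next = makeDeps();
    if (depsChanged(prev, next)) effectRuns++;
    prev = next;
  }
  return effectRuns;
}

const orgId = "org-123"; // hypothetical identifier

// Buggy: a new object literal is created on every render, so it is never
// identical to the previous one and the effect runs on every render.
const buggyRuns = simulateRenders(5, () => [{ orgId }]);

// Fixed: depend on the primitive value, which is stable across renders.
const fixedRuns = simulateRenders(5, () => [orgId]);

console.log({ buggyRuns, fixedRuns });
```

With the object in the dependency array, the effect runs on all five simulated renders; with the primitive, it runs only on the first. In a real component, the usual remedies are to depend on primitive fields or to memoize the object so its identity stays stable between renders.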

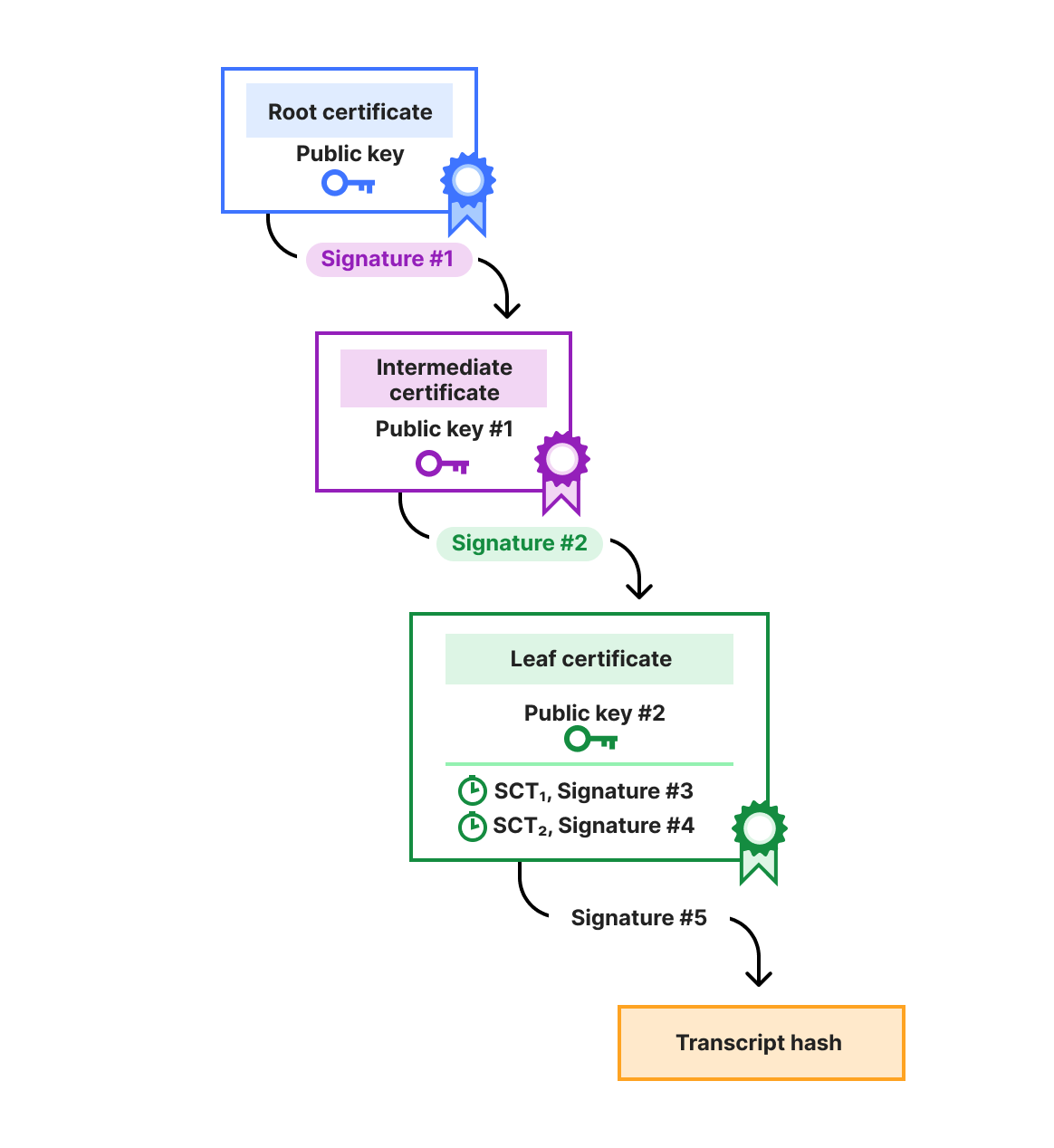

When the Tenant Service became overloaded, it affected other APIs and the dashboard because the Tenant Service is part of our API request authorization logic. Without the Tenant Service, API request authorization cannot be evaluated. When authorization evaluation fails, API requests return 5xx status codes.

We’re very sorry about the disruption. The rest of this post goes into depth on what happened and what steps we are taking to prevent it from happening again.

| Time | Description |
|---|---|
| 2025-09-12 16:32 | A new version of the Cloudflare Dashboard is released which contains a bug that will trigger many more calls to the /organizations endpoint, including retries in the event of failure. |
| 2025-09-12 17:50 | A new version of the Tenant API Service is deployed. |
| 2025-09-12 17:57 | The Tenant API Service becomes overwhelmed as new versions are deploying. Dashboard Availability begins to drop. IMPACT START |
| 2025-09-12 18:17 | After providing more resources to the Tenant API Service, the Cloudflare API climbs to 98% availability, but the dashboard does not recover. IMPACT DECREASE |
| 2025-09-12 18:58 | In an attempt to restore dashboard availability, some erroring codepaths were removed and a new version of the Tenant Service is released. This was ultimately a bad change and causes API Impact again. IMPACT INCREASE |
| 2025-09-12 19:01 | In an effort to relieve traffic against the Tenant API Service, a temporary rate-limiting rule is published. |
| 2025-09-12 19:12 | The problematic changes to the Tenant API Service are reverted, and Dashboard Availability returns to 100%. IMPACT END |

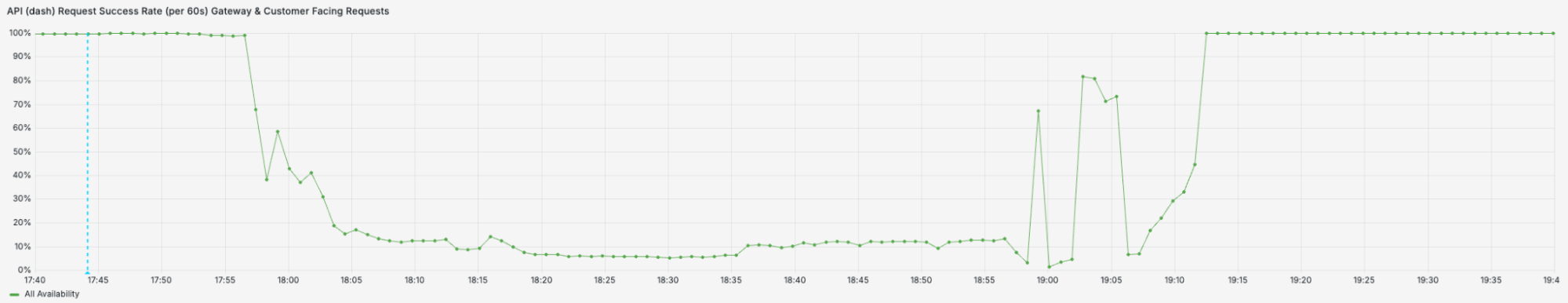

The Cloudflare dashboard was severely impacted throughout the full duration of the incident.

The Cloudflare API was severely impacted for two periods during the incident when the Tenant API Service was down.

Our first goal in an incident is to restore service. Often that involves fixing the underlying issue directly, but not always. In this case we noticed increased usage across our Tenant Service, so we focused on reducing the load and increasing the available resources. To regulate the load, we installed a global rate limit on the Tenant Service. The Tenant Service is a Go process that runs on Kubernetes in a subset of our datacenters, so we also increased the number of pods available to improve throughput. While we did this, others on the team continued to investigate why we were seeing the unusually high usage. Ultimately, increasing the resources available to the Tenant Service helped with availability but was insufficient to restore normal service.
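For readers unfamiliar with rate limiting, the general idea can be sketched with a token bucket. This is an illustration only: we are not describing how the rule we published is implemented, and the `TokenBucket` class and its parameters are hypothetical. The clock is passed in explicitly so the behavior is deterministic.

```typescript
// A token bucket: holds up to `capacity` tokens, refilled at a steady rate.
// Each admitted request spends one token; requests are rejected when empty.
class TokenBucket {
  private readonly capacity: number;
  private readonly refillPerSecond: number;
  private tokens: number;
  private lastRefillMs: number;

  constructor(capacity: number, refillPerSecond: number, nowMs: number) {
    this.capacity = capacity;
    this.refillPerSecond = refillPerSecond;
    this.tokens = capacity; // start full so an initial burst is allowed
    this.lastRefillMs = nowMs;
  }

  // Returns true if the request is admitted, false if it should be rejected.
  tryAcquire(nowMs: number): boolean {
    if (nowMs > this.lastRefillMs) {
      const elapsedSeconds = (nowMs - this.lastRefillMs) / 1000;
      this.tokens = Math.min(
        this.capacity,
        this.tokens + elapsedSeconds * this.refillPerSecond,
      );
      this.lastRefillMs = nowMs;
    }
    if (this.tokens >= 1) {
      this.tokens -= 1;
      return true;
    }
    return false;
  }
}

// Allow bursts of 2 requests, refilling 1 token per second.
const bucket = new TokenBucket(2, 1, 0);
const admitted = [
  bucket.tryAcquire(0), // first request in the burst
  bucket.tryAcquire(0), // second request in the burst
  bucket.tryAcquire(0), // bucket is empty
  bucket.tryAcquire(1000), // one token has been refilled
  bucket.tryAcquire(1000), // empty again
];
console.log(admitted);
```

A limit like this protects the service from being overwhelmed, but it does not distinguish useful requests from wasteful ones, which is why it could only buy time while the source of the extra load was investigated.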

After the Tenant Service began reporting healthy again and the API largely recovered, we still observed a considerable number of errors being reported from the service. We theorized that these were responsible for the ongoing Dashboard availability issues and made a patch to the service with the expectation that it would improve the API health and restore the dashboard to a healthy state. Ultimately this change degraded service further and was quickly reverted. The second outage can be seen in the graph above.

It’s painful to have an outage like this. That said, there were a few things that helped lessen the impact. Our automatic alerting service quickly identified the correct people to join the call and start working on remediation. Additionally, this was a failure in the control plane, which has strict separation of concerns from the data plane. Thus, the outage did not affect services on Cloudflare’s network. The majority of Cloudflare users were unaffected unless they were making configuration changes or using our dashboard.

We believe it’s important to learn from our mistakes, and this incident is an opportunity to make some improvements. Those improvements fall into two categories: ways to reduce or eliminate the impact of a similar change, and improvements to our observability tooling to better inform the team during future events.

We use Argo Rollouts for releasing, which monitors deployments for errors and automatically rolls back the service when an error is detected. We’ve been migrating our services over to Argo Rollouts but have not yet updated the Tenant Service to use it. Had it been in place, we would have automatically rolled back the second Tenant Service update, limiting the second outage. This work had already been scheduled by the team, and we’ve increased the priority of the migration.

When we restarted the Tenant Service, everyone’s dashboard began to re-authenticate with the API. This made the API unstable again, which in turn caused issues with everyone’s dashboard. This pattern is a common one, often referred to as a thundering herd: once a resource or service becomes available, everyone tries to use it at once. In our case it was amplified by the bug in our dashboard logic. The fix for that bug was released via a hotfix shortly after the impact was over. We’ll also be introducing changes to the dashboard that add random delays to spread out retries and reduce contention.
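One common way to add random delays to retries is exponential backoff with jitter. The sketch below is illustrative and is not the dashboard's actual retry logic: the function name `backoffDelayMs`, the exponential growth, and the base and cap values are all assumptions. The random source is injectable so the example is reproducible.

```typescript
// Computes how long to wait before retry number `attempt` (0-based).
// The upper bound doubles with each attempt, up to `capMs`, and the actual
// delay is drawn uniformly from [0, upper bound) so that clients which
// failed at the same moment do not all retry at the same moment.
function backoffDelayMs(
  attempt: number,
  baseMs: number,
  capMs: number,
  random: () => number = Math.random,
): number {
  const exponent = Math.max(0, attempt);
  const ceiling = Math.min(capMs, baseMs * Math.pow(2, exponent));
  return Math.floor(random() * ceiling);
}

// With a fixed "random" value of 0.5, the schedule is easy to inspect.
const fixedRandom = (): number => 0.5;
const schedule = [0, 1, 2, 3].map((attempt) =>
  backoffDelayMs(attempt, 100, 10000, fixedRandom),
);
console.log(schedule);

// In real use the default Math.random spreads clients across the window.
const jittered = backoffDelayMs(3, 100, 10000);
console.log(jittered);
```

Because each client picks its own delay within the window, a service that has just come back sees retries spread over time instead of arriving in a single burst.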

Finally, the Tenant Service was not allocated sufficient capacity to handle spikes in load like this. We’ve allocated substantially more resources to this service, and are improving the monitoring so that we will be proactively alerted before this service hits capacity limits.

We immediately saw an increase in our API usage but found it difficult to identify which requests were retries and which were new requests. Had we known that we were seeing a sustained large volume of new requests, it would have been easier to identify the issue as a loop in the dashboard. We are changing how we call our APIs from our dashboard to include additional information, including whether the request is a retry or a new request.
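As a rough sketch of what tagging requests could look like, the example below attaches the attempt number to each request as a header and classifies requests on the receiving side. The header name `x-retry-attempt` and the helper functions are hypothetical, chosen only for illustration; they do not describe the actual change to the dashboard.

```typescript
// Hypothetical header carrying the attempt number: "0" for a new request,
// "1" or higher for a retry.
const RETRY_ATTEMPT_HEADER = "x-retry-attempt";

type Headers = Record<string, string>;

// Client side: copy the headers and record which attempt this request is.
function withRetryMetadata(headers: Headers, attempt: number): Headers {
  return { ...headers, [RETRY_ATTEMPT_HEADER]: String(attempt) };
}

// Server side: a missing or unparseable header is treated as a new request.
function isRetry(headers: Readonly<Record<string, string | undefined>>): boolean {
  const raw = headers[RETRY_ATTEMPT_HEADER];
  const attempt = raw === undefined ? 0 : Number.parseInt(raw, 10);
  return Number.isFinite(attempt) && attempt > 0;
}

// Splits observed traffic into new requests and retries for monitoring.
function countByKind(requests: readonly Headers[]): { fresh: number; retries: number } {
  let fresh = 0;
  let retries = 0;
  for (const headers of requests) {
    if (isRetry(headers)) retries++;
    else fresh++;
  }
  return { fresh, retries };
}

const observed: Headers[] = [
  withRetryMetadata({ accept: "application/json" }, 0),
  withRetryMetadata({ accept: "application/json" }, 0),
  withRetryMetadata({ accept: "application/json" }, 2),
  { accept: "application/json" }, // a client that sends no metadata
];
const counts = countByKind(observed);
console.log(counts);
```

With a breakdown like this, a sustained surge in new requests stands out from a surge in retries, pointing toward a request loop in the client instead of a struggling backend.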

We’re very sorry about the disruption. We will continue to investigate this issue and make improvements to our systems and processes.